I recently had the occasion to set up a highly available, active-passive iSCSI over DRBD cluster, using Corosync as the messaging bus between the nodes and Pacemaker as the cluster manager. The cluster was composed of multiple DRBD resources, most of them being iSCSI logical units, or LUNs.

Although I won’t go into detail on how to set up DRBD and iSCSI in a highly available cluster, as this is very well documented by Linbit, the creators of DRBD, in their “Highly available DRBD and iSCSI with Pacemaker” Tech Guide (free account required), I will still walk through a few gotchas I ran into while setting up my cluster.

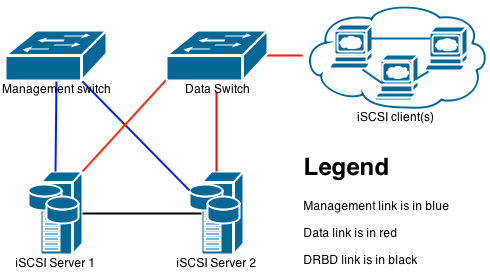

For the sake of simplicity, I’ll refer to the iSCSI targets as the iSCSI servers, and iSCSI Initiators as the iSCSI clients. The simplified network diagram is as follows.

Using multiple network links

As you may have noticed, the setup uses multiple Ethernet links to segregate the different types of traffic, in order to avoid congestion and maximize throughput. In this example, we’ll use three links, each with its own permanently assigned private IP address.

One of the links, the data link, is dedicated to iSCSI traffic. The second carries DRBD traffic; we’ll call it the DRBD link. The last one is used for monitoring and management access, as well as to reach the default gateway, so let’s name it the management link.

You want to make sure that each of those links goes through a completely independent network, especially the management and DRBD links. In my case, the DRBD link is a direct connection between the iSCSI servers.

To optimize data transfer rates, we also use jumbo frames of roughly 9 000 bytes on our data and DRBD links, as opposed to the standard Ethernet frame size of 1 538 bytes. The reasoning is that most file systems allocate space in blocks of 4KiB by default (this is called the allocation unit), so the smallest file you store takes up 4KiB and every file occupies a multiple of that size. By using bigger frames, you make sure that the smallest file you can store doesn’t get split across multiple frames.

The management interface doesn’t require any particular configuration for optimization, as there will not be any service running on it that will provide huge amounts of data.

Advantages of using jumbo frames

To demonstrate the advantage of using jumbo frames, I’ll use a standard TCP/IP packet as an example, as both DRBD and iSCSI use the same protocols at that level, and a file of 4KiB (4 096 bytes).

The standard frame

A standard Ethernet frame takes up 1 538 bytes on the wire, which corresponds to a Maximum Transmission Unit, or MTU, of 1 500 bytes. Each frame includes 78 bytes of overhead (Ethernet framing plus the IP and TCP headers). In this example, this leaves us with 1 460 bytes to fit our file. Since the file doesn’t fit in a single frame, we have to divide it into smaller parts. Since we don’t want data loss, and every frame carries its full set of headers, we round the number of parts up. This gives us the following formula:

$$\text{parts} = \frac{\text{File size}}{\text{Frame size} - \text{Header size}}$$

When we apply the formula to our 4KiB file, we get 2.81 parts, which we round up to 3. This means we send 2 full Ethernet frames, and a third one with a smaller amount of data. Since we already know that 1 460 bytes of data fit in a standard frame, let’s work out how much data is left for the third frame.

$$\text{remainder} = 4096 - (1460 \times 2) = 1176$$

This leaves us with 1 176 bytes of data in our last frame, to which we’ll add the 78 bytes of headers. This gives us a frame of 1 254 bytes.

Now, let’s calculate the amount of data actually transferred for our 4KiB file. Our 2 full-size Ethernet frames take up 3 076 bytes, to which we add the third frame of 1 254 bytes, for a total of 4 330 bytes. That’s an increase of 5.7% over our original file size of 4 096 bytes. If we have to transfer a 1 000MB file, not accounting for allocation unit restrictions, we have to send about 1 057MB worth of Ethernet frames.

The jumbo frame

For this example, our jumbo frames have a size of 9 000 bytes with the same header size as the standard frame, and the same 4KiB (4 096 byte) file.

If we remove the 78 bytes worth of headers from our Ethernet frame, we have 8 922 bytes left for our file. Since our file is smaller than that, it fits in a single frame, and we simply add the header size to our file size, for a total of 4 174 bytes transferred. This amounts to an increase of 1.9% over the original file, which is way better than the 5.7% increase we had with the standard frames.
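The arithmetic for both frame sizes can be checked with a few lines of shell. This is only a sketch of the simplified model used in this post (a fixed 78 bytes of overhead per frame); the function name `wire_bytes` is my own and not part of any tool.

```shell
#!/bin/sh
# wire_bytes PAYLOAD FRAME_SIZE HEADER_SIZE
# Prints the total number of bytes sent for PAYLOAD bytes of data,
# using the same model as the text: each frame carries
# (FRAME_SIZE - HEADER_SIZE) bytes of data plus HEADER_SIZE bytes of headers.
wire_bytes() {
    payload=$1
    frame=$2
    header=$3
    per_frame=$(( frame - header ))
    # Round the number of frames up (integer ceiling division).
    frames=$(( (payload + per_frame - 1) / per_frame ))
    echo $(( payload + frames * header ))
}

wire_bytes 4096 1538 78   # standard frames, 3 frames: prints 4330
wire_bytes 4096 9000 78   # jumbo frame, 1 frame: prints 4174
```

Changing the first argument lets you try other file sizes against the same two frame sizes.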

When you configure jumbo frames on your network links, make sure that all the network equipment, including network cards and switches, support this option. Also, ensure that your Linux distribution and the network driver support that option. Usually, you’ll find that information in the documentation that came with your hardware and software. It may be shown as jumbo frame, large frames, configurable MTU, etc.
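One way to check that jumbo frames really make it from one end of a link to the other is to send a ping that is not allowed to be fragmented. The sketch below assumes a Linux system with the `ip` and iputils `ping` commands and an interface MTU set to 9000; the interface name `eth1` and the peer address `10.0.0.2` are hypothetical, and the helper `icmp_payload_for_mtu` is my own. It only prints the commands, since running them requires root and a real peer.

```shell
#!/bin/sh
# icmp_payload_for_mtu MTU
# Largest ICMP echo payload that fits in one unfragmented IPv4 packet:
# the MTU minus 20 bytes of IPv4 header and 8 bytes of ICMP header.
icmp_payload_for_mtu() {
    echo $(( $1 - 20 - 8 ))
}

# Hypothetical values: adjust them to your own DRBD or data link.
IFACE=eth1
PEER=10.0.0.2
MTU=9000

# Print the commands instead of running them.
echo "ip link set dev $IFACE mtu $MTU"
echo "ping -M do -c 3 -s $(icmp_payload_for_mtu "$MTU") $PEER"
```

If the ping fails with a "message too long" style error while a smaller payload goes through, something along the path is probably not configured for jumbo frames.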

DRBD

I strongly suggest that you make sure that your DRBD resources are properly initialized and working before you start configuring Corosync. This will avoid hard-to-diagnose errors when you configure your cluster management software.

Analyze your system logs, usually in `/var/log/syslog`, to make sure that you don’t have any split-brain warnings. If you do, make sure they are fixed. I suggest that you read the DRBD manual on how to recover from those.
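A quick way to look for those warnings is to search the log for the words "split-brain". The sketch below assumes the log lives in `/var/log/syslog`, as mentioned above, and matches case-insensitively because the exact wording of the DRBD message may vary between versions. The function name `count_split_brain` is my own.

```shell
#!/bin/sh
# count_split_brain LOGFILE
# Prints how many lines of LOGFILE mention a split-brain, ignoring case.
count_split_brain() {
    grep -ci 'split-brain' "$1"
}

LOG=/var/log/syslog   # path from the text; it may differ on your distribution
if [ -r "$LOG" ]; then
    echo "split-brain mentions in $LOG: $(count_split_brain "$LOG")"
fi
```

A count of zero is what you want to see before moving on to Corosync.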

Also, when you are finished setting up your resources, make sure you bring all of them down on your two iSCSI servers, with the primary server being stopped last.

This can be done using the following commands on every resource (I have, for this example, resources r0, r1 and r2):

    drbdadm secondary r0 r1 r2  # You want to demote the resource to avoid writes
    drbdadm down r0 r1 r2       # Stop the DRBD resource

iSCSI Target service

When you configure your iSCSI Target service, avoid configuring your logical units, or LUNs. They will be added automatically by Pacemaker when it makes the server active.

Corosync

When you configure Corosync, you’ll want to make sure it uses two network connections to communicate between the two storage servers. By doing so, we make sure that Corosync is still able to see the other server even when one of the links is completely down. This allows us to avoid a DRBD split-brain situation, and with it a possible loss of data.

In our example, we’ll be running the Corosync cluster on the management and DRBD links. Those links were chosen because the management link carries very little traffic, whereas the DRBD one is a direct connection between both servers.

Note that I am using Ubuntu 12.04.5 LTS; configuration settings have changed a little in more recent versions of Corosync, but the theory stays the same. To instruct Corosync to use two network interfaces, we have to create a ring for each interface and change the Redundant Ring Protocol, or RRP, mode.

There are three available RRP modes: none, active and passive. In none mode, the protocol offers no redundancy, which is not appropriate in our case. In passive mode, Corosync uses the first ring and switches to the second ring when the first fails, whereas active mode uses all rings at the same time. Both passive and active are appropriate for our cluster, with a preference for active. There are a few considerations to take into account when you use the active or passive mode (from the Red Hat Cluster Administration manual):

- Do not specify more than two rings.

- Each ring must use the same protocol; do not mix IPv4 and IPv6.

- If necessary, you can manually specify a multicast address for the second ring. If you specify a multicast address for the second ring, either the alternate multicast address or the alternate port must be different from the multicast address for the first ring. If you do not specify an alternate multicast address, the system will automatically use a different multicast address for the second ring.

- If you specify an alternate port, the port numbers of the first ring and the second ring must differ by at least two, since the system itself uses port and port-1 to perform operations.

- Do not use two different interfaces on the same subnet.

- In general, it is a good practice to configure redundant ring protocol on two different NICs and two different switches, in case one NIC or one switch fails.

- Do not use the `ifdown` command or the `service network stop` command to simulate network failure. This destroys the whole cluster and requires that you restart all of the nodes in the cluster to recover.

- Do not use `NetworkManager`, since it will execute the `ifdown` command if the cable is unplugged.

- When one node of a NIC fails, the entire ring is marked as failed.

- No manual intervention is required to recover a failed ring. To recover, you only need to fix the original reason for the failure, such as a failed NIC or switch.
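The port rule from that list is easy to get wrong when you pick port numbers by hand, so here is a small sketch that checks it. The function name `ring_ports_ok` is my own, and it only covers the "differ by at least two" rule, nothing else from the list.

```shell
#!/bin/sh
# ring_ports_ok PORT0 PORT1
# Succeeds when the two multicast ports differ by at least two,
# since Corosync uses both mcastport and mcastport - 1 on each ring.
ring_ports_ok() {
    diff=$(( $1 - $2 ))
    # Take the absolute value of the difference.
    if [ "$diff" -lt 0 ]; then
        diff=$(( 0 - diff ))
    fi
    [ "$diff" -ge 2 ]
}

if ring_ports_ok 5405 5410; then
    echo "ports OK"
else
    echo "ports too close"
fi
```

With 5405 and 5410 the check passes, while 5405 and 5406 would be rejected.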

To configure this, edit your `/etc/corosync/corosync.conf` file and, in the `totem` section, change the value of the `rrp_mode` setting to either `active` or `passive`. Then define an `interface` block for each of your network interfaces. Note that we use `netmtu` to enable jumbo frames for Corosync on our DRBD link. It should look something like this:

    # The Redundant Ring Protocol mode
    rrp_mode: active

    # Management link
    interface {
        ringnumber: 0
        bindnetaddr: 192.168.10.0
        mcastaddr: 224.94.10.1
        mcastport: 5405
    }

    # DRBD link
    interface {
        ringnumber: 1
        bindnetaddr: 10.0.0.0
        netmtu: 8982
        mcastaddr: 224.94.11.1
        mcastport: 5410
    }
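Once Corosync is running with both rings, `corosync-cfgtool -s` prints the status of each ring on the local node. The sketch below counts the rings reported as faulty. It assumes that a faulty ring is reported with the word `FAULTY` in its status line, which is what I would expect from the Corosync 1.x series, so check the output of your own version. The function name `faulty_rings` is my own.

```shell
#!/bin/sh
# faulty_rings
# Reads `corosync-cfgtool -s` output on stdin and prints the number of
# lines that report a FAULTY ring.
faulty_rings() {
    grep -c 'FAULTY'
}

# Only query Corosync when the tool is actually installed.
if command -v corosync-cfgtool >/dev/null 2>&1; then
    echo "faulty rings: $(corosync-cfgtool -s | faulty_rings)"
fi
```

A result of zero on both servers means that both rings are seen as healthy.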

Once they are configured, you have to make sure that your services start with your system. On Ubuntu 12.04.5 LTS, you have to reconfigure the system boot and shutdown order since, by default, it will try to stop `iscsitarget` and `corosync` before stopping Pacemaker. This will cause the system to hang when you try to reboot it. Do so by issuing the following commands:

    update-rc.d -f corosync remove
    update-rc.d -f iscsitarget remove
    update-rc.d pacemaker defaults
    update-rc.d corosync start 19 2 3 4 5 . stop 40 0 1 6 .
    update-rc.d iscsitarget start 18 2 3 4 5 . stop 45 0 1 6 .

Once your services have started, you can start configuring your cluster resources using `crm`. Keep in mind that in an active-passive configuration like the one I made, you want to have all your DRBD resources on the same cluster node as your iSCSI LUNs and virtual IP addresses. Also, don’t forget that you can’t put `ms` (master-slave) resources in a `group`, but you can use `colocation` to make sure they always run on the same node and `order` to make sure they start in the correct order, and you can group everything else together. Also, remember that a group works like a colocation and an order constraint combined.

To work around that limitation, I put all my iSCSI LUNs, my iSCSI Target and my virtual IPs in a group called DATA_GROUP. Then, I used a colocation constraint to make sure that all of them run on the active server, the one where DRBD is master, and an order constraint so that DATA_GROUP starts after DRBD is ready.

    group DATA_GROUP ISCSI ISCSI_LUN_R0 ISCSI_LUN_R1 ISCSI_LUN_R2 VIRTUAL_IP
    colocation DRBD_with_DATA inf: DRBD_R0_MS:Master DRBD_R1_MS:Master DRBD_R2_MS:Master DATA_GROUP
    order DATA_after_DRBD inf: DRBD_R0_MS:promote DRBD_R1_MS:promote DRBD_R2_MS:promote DATA_GROUP:start

When you’re finished, make sure everything is started properly by using `crm_mon -r1f`. If you have any failed actions, check your system logs in order to sort them out.

For every DRBD resource configured, you should have a Master entry and a Slaves entry. If an entry with a name like `DRBD_R1:0` shows up as offline, you most likely have a split-brain; check your system logs. If you really have a split-brain, you’ll have to put both of your nodes in standby by issuing `crm node standby`, fix the split-brain, stop your DRBD resources and then bring your nodes back online by using `crm node online`.
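For the "fix the split-brain" step, the DRBD User’s Guide describes a manual recovery procedure. The sketch below only prints the commands from that procedure so that you can review them first, because the victim node throws away its own changes. It assumes the DRBD 8.3 command syntax; the function name `print_split_brain_recovery` and the resource name `r1` are only there for illustration.

```shell
#!/bin/sh
# print_split_brain_recovery RESOURCE ROLE
# Prints (does not run) the manual recovery commands for RESOURCE.
# ROLE is "victim" (the node whose changes are thrown away) or
# "survivor" (the node whose data is kept).
print_split_brain_recovery() {
    res=$1
    case $2 in
        victim)
            echo "drbdadm secondary $res"
            # DRBD 8.3 syntax; on 8.4 the equivalent is:
            #   drbdadm connect --discard-my-data RESOURCE
            echo "drbdadm -- --discard-my-data connect $res"
            ;;
        survivor)
            # Only needed if the survivor is also in the StandAlone state.
            echo "drbdadm connect $res"
            ;;
        *)
            echo "usage: print_split_brain_recovery RESOURCE victim|survivor" >&2
            return 1
            ;;
    esac
}

print_split_brain_recovery r1 victim
print_split_brain_recovery r1 survivor
```

Read the relevant chapter of the manual for your DRBD version before running any of these, and make sure you pick the right node as the victim.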

Test your stuff

Make sure to test all the applicable crash scenarios for your use case. Some of the tests I’ve done are:

- Cut the network links and make sure that the servers don’t split-brain;

- Write to an iSCSI LUN, which I partitioned and formatted on the iSCSI client side, and cut power to the nodes. It should be close to transparent on the client side.

Don’t forget to document what you did, as it can get complex really fast, and have a good backup strategy. DRBD or iSCSI, by itself, is no replacement for a backup strategy, but can be used as a base layer for one.